Campbell’s death was as gruesome as the killers’ previous nine known crimes. Found mutilated in a pool of blood at his home in the district of Albany, South Africa, in June 2016, Campbell had been drugged but was likely in pain before he died from his injuries.

Campbell was a white rhinoceros living on a private reserve, and his killing would be the last hurrah of the now notorious Ndlovu Gang. The three poachers were arrested days later at the Makana Resort in Grahamstown, South Africa, caught red-handed with a bow saw, a tranquilizer dart gun and a freshly removed rhino horn. A variety of evidence, including cellphone records and ballistics analysis of the dart gun, would link them to the crime. But a key element was Campbell’s DNA, found in the horn and on the still-bloody saw.

Among the scientific techniques used to combat poaching and wildlife trafficking, DNA is king, says Cindy Harper, a veterinary geneticist at the University of Pretoria. Its application in animal investigations is small-scale but growing in a field with a huge volume of crime: The value of the illegal wildlife trade is as much as $20 billion per year, Interpol estimates.

“It’s not just a few people swapping animals around,” says Greta Frankham, a wildlife forensic scientist at the Australian Center for Wildlife Genomics in Sydney. “It’s got links to organized crime; it is an enormous amount of turnover on the black market.”

The problem is global. In the United States, the crime might be the illegal hunting of deer or black bears, the importing of protected-animal parts for food or medicinal use, the harvesting of protected cacti, or the trafficking of ivory trinkets. In Africa or Asia, it might be the poaching of pangolins, the globe’s most trafficked mammal for both its meat and its scales, which are used in traditional medicines and magic practices. In Australia, it might be the collection or export of the continent’s unique wildlife for the pet trade.

Techniques used in wildlife forensics are often direct descendants of tools from human crime investigations, and in recent years scientists have adapted and tailored them for use in animals. Harper and colleagues, for example, learned to extract DNA from rhinoceros horns, a task once thought impossible. And by building DNA databases, akin to the FBI’s CODIS database used for human crimes, forensic geneticists can identify a species and more: They might pinpoint a specimen’s geographic origin or family group or even, in some cases, link a specific animal or animal part to a crime scene.

Adapting this science to animals has contributed to major crime busts, such as the 2021 arrests in an international poaching and wildlife trafficking ring. And scientists are further refining their techniques in the hopes of identifying more challenging evidence samples, such as hides that have been tanned or otherwise degraded.

“Wildlife trafficking investigations are difficult,” says Robert Hammer, a Seattle-based special agent-in-charge with Homeland Security Investigations, the Department of Homeland Security’s arm for investigating diverse crimes, including those involving smuggling, drugs and gang activity. He and his colleagues, he says, rely on DNA and other forensic evidence “to tell the stories of the animals that have been taken.”

First, identify

Wildlife forensics generally starts with a sample sent to a specialized lab by investigators like Hammer. Whereas people-crime investigators generally want to know “Who is it?” wildlife specialists are more often asked “What is this?” — as in, “What species?” That question could apply to anything from shark fins to wood to bear bile, a liver secretion used in traditional medicines.

“We get asked questions about everything from a live animal to a part or a product,” says Barry Baker, deputy laboratory director at the US National Fish and Wildlife Forensics Laboratory in Ashland, Oregon.

Investigators might also ask whether an animal photographed at an airport is a species protected by the Convention on International Trade in Endangered Species of Wild Fauna and Flora, or CITES, in which case import or export is illegal without a permit. They might want to know whether meat brought into the US is from a protected species, such as a nonhuman primate. Or they might want to know if a carved knickknack is real ivory or fake, a difference special lighting can reveal.

While some identifications can be made visually, DNA or other chemical analyses may be required, especially when only part of the creature is available. To identify species, experts turn to the DNA in mitochondria, the cellular energy factories that populate nearly every cell, usually in multiple copies. DNA sequences therein are similar in all animals of the same species, but different between species. By reading those genes and comparing them to sequences in a database such as the Barcode of Life, forensic geneticists can identify a species.
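As a rough illustration of that comparison step, the Python sketch below scores a query sequence against a handful of reference barcodes and reports the closest one. It is a toy under stated assumptions, not how any lab named here works: the sequences are invented, the function names and the 95 percent cutoff are hypothetical, and the sequences are assumed to be already aligned to the same length. Real searches run against curated databases with proper alignment tools.

```python
def percent_identity(a: str, b: str) -> float:
    """Fraction of matching positions between two equal-length, pre-aligned sequences."""
    if len(a) != len(b):
        raise ValueError("sequences must be aligned to the same length")
    matches = sum(1 for x, y in zip(a, b) if x == y)
    return matches / len(a)


# Hypothetical reference barcodes: invented toy sequences, not real data.
REFERENCE = {
    "species_A": "ACGTACGTACGTACGTACGT",
    "species_B": "ACGTTCGTACCTACGAACGT",
    "species_C": "TCGAACGGACGTTCGTACCA",
}


def identify_species(query, reference=REFERENCE, threshold=0.95):
    """Return (best_species_or_None, best_score).

    The threshold is an illustrative assumption; below it, no call is made.
    """
    best_name, best_score = max(
        ((name, percent_identity(query, seq)) for name, seq in reference.items()),
        key=lambda pair: pair[1],
    )
    return (best_name if best_score >= threshold else None), best_score
```

A query that falls below the cutoff for every reference returns no species at all, which mirrors the article's later point that an identification is only as good as the database behind it.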

To go further to try to link a specimen to a specific, individual animal, forensic geneticists use the same technique that’s used in human DNA forensics, in this case relying on the majority of DNA contained in the cell’s nucleus. Every genome contains repetitive sequences called microsatellites that vary in length from individual to individual. Measuring several microsatellites creates a DNA fingerprint that is rare, if not unique. In addition, some more advanced techniques use single-letter variations in DNA sequences for fingerprinting.
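To make the fingerprint idea concrete, here is a minimal Python sketch that treats a profile as a set of loci, each carrying two allele lengths (one from each parent), and checks whether two samples agree wherever both were measured. The locus names, repeat counts and function names are all hypothetical, and the sketch ignores genotyping error, which real casework has to account for.

```python
def normalize(profile):
    """Sort each locus's allele pair so (16, 14) and (14, 16) compare equal."""
    return {locus: tuple(sorted(alleles)) for locus, alleles in profile.items()}


def compare_profiles(sample_a, sample_b):
    """Compare two microsatellite profiles at the loci they have in common."""
    a, b = normalize(sample_a), normalize(sample_b)
    shared = sorted(set(a) & set(b))
    mismatches = [locus for locus in shared if a[locus] != b[locus]]
    return {
        "loci_compared": len(shared),
        "mismatches": mismatches,
        # Consistent only if at least one locus was compared and none disagree.
        "consistent": bool(shared) and not mismatches,
    }


# Invented example data: locus names and repeat counts are hypothetical.
horn = {"locus_1": (14, 16), "locus_2": (9, 9), "locus_3": (21, 23)}
carcass = {"locus_1": (16, 14), "locus_2": (9, 9), "locus_3": (23, 21)}
other_animal = {"locus_1": (14, 15), "locus_2": (9, 11), "locus_3": (21, 23)}

print(compare_profiles(horn, carcass))
print(compare_profiles(horn, other_animal))
```

Three loci are used here only for brevity; the more loci that agree, the rarer the shared profile becomes.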

Comparing the DNA of two samples allows scientists to make a potential match, but it isn’t a clincher: That requires a database of DNA fingerprints from other members of the species to calculate how unlikely it is — say, a one-in-a-million chance — that the two samples came from different individuals. Depending on the species’ genetic diversity and its geographic distribution, a valid database could have as few as 50 individuals or it could require many more, says Ashley Spicer, a wildlife forensic scientist with the California Department of Fish and Wildlife in Sacramento. Such databases don’t exist for all animals and, indeed, obtaining DNA samples from even as few as 50 animals could be a challenge for rare or protected species, Spicer notes.
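One textbook way to arrive at such a number is the product rule: estimate how common each allele is from the reference database, convert that to a genotype frequency at each locus, and multiply across loci. The Python sketch below follows that approach. It assumes Hardy-Weinberg proportions and independent loci, uses an invented four-animal database, and is not necessarily the calculation any lab quoted here performs; the function names are hypothetical.

```python
from collections import Counter
from math import prod


def estimate_allele_frequencies(database):
    """Estimate per-locus allele frequencies from a list of reference profiles."""
    counts = {}
    for profile in database:
        for locus, alleles in profile.items():
            counts.setdefault(locus, Counter()).update(alleles)
    return {
        locus: {allele: n / sum(c.values()) for allele, n in c.items()}
        for locus, c in counts.items()
    }


def genotype_frequency(alleles, freqs):
    """Expected genotype frequency under Hardy-Weinberg: p*p or 2*p*q.

    Raises KeyError for an allele absent from the database; a real
    analysis would need a rule for such unseen alleles.
    """
    a, b = alleles
    p, q = freqs[a], freqs[b]
    return p * p if a == b else 2 * p * q


def random_match_probability(profile, allele_freqs):
    """Multiply genotype frequencies across loci (assumes loci are independent)."""
    return prod(
        genotype_frequency(alleles, allele_freqs[locus])
        for locus, alleles in profile.items()
    )


# Invented reference database of four animals at two hypothetical loci.
database = [
    {"L1": (10, 12), "L2": (8, 8)},
    {"L1": (10, 10), "L2": (8, 9)},
    {"L1": (12, 14), "L2": (9, 9)},
    {"L1": (10, 14), "L2": (8, 8)},
]
freqs = estimate_allele_frequencies(database)
evidence = {"L1": (10, 12), "L2": (8, 8)}
print(random_match_probability(evidence, freqs))
```

With only two loci and four animals the resulting probability is far too large to be persuasive, which is the practical reason a profile spans many loci and a database needs enough individuals to estimate allele frequencies reliably.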

Investigators use these techniques in diverse ways: An animal may be the victim of a crime, the perpetrator or a witness. And if, say, dogs are used to hunt protected animals, investigators could find themselves with animal evidence related to both victim and suspect.

For witnesses, consider the case of a white cat named Snowball. When a woman disappeared in Richmond, on Canada’s Prince Edward Island, in 1994, a bloodstained leather jacket with 27 white cat hairs in the lining was found near her home. Her body was found in a shallow grave in 1995, and the prime suspect was her estranged common-law husband, who lived with his parents and Snowball, their pet. DNA from the root of one of the jacket hairs matched Snowball’s blood. Though the feline never took the stand, the cat’s evidence spoke volumes, helping to clinch a murder conviction in 1996.

A database for rhinos

The same kind of specific linking of individual animal to physical evidence was also a key element in the case of Campbell the white rhino. Rhino horn is prized: It’s used in traditional Chinese medicine and modern variants of the practice to treat conditions from colds to hangovers to cancer, and is also made into ornaments such as cups and beads. At the time of Campbell’s death, his horn, weighing north of 10 kilograms, was probably worth more than $600,000 — more than its weight in gold — on the black market.

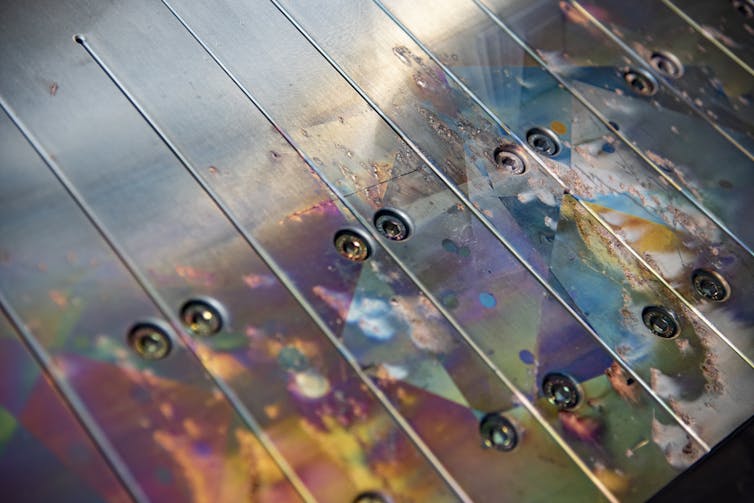

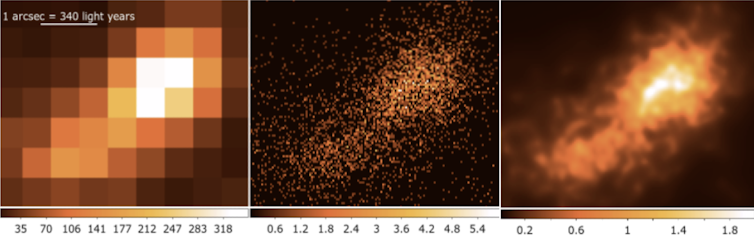

The DNA forensics that helped nab the Ndlovu Gang started with experiments in the early 2000s, when rhino poaching was on the rise. Scientists once thought rhino horns were nothing but densely packed hair, lacking cells that would include DNA, but a 2006 study showed that cells, too, are present. A few years later, Harper’s group reported that even though these cells were dead, they contained viable DNA, and the researchers figured out how to access it by drilling into the horn’s core.

In 2010, a crime investigator from South Africa’s Kruger National Park dropped by Harper’s lab. He was so excited by the potential of her discovery to combat poaching that he ripped a poster describing her results off the wall, rolled it up and took it away with him. Soon after, Harper launched the Rhinoceros DNA Index System, or RhODIS. (The name is a play on the FBI’s CODIS database, for Combined DNA Index System.)

Today, thanks to 2012 legislation from the South African government, anyone in that nation who handles a rhino or its horn — for example, when dehorning animals for the rhinos’ own protection — must send Harper’s team a sample. RhODIS now contains about 100,000 DNA fingerprints, based on 23 microsatellites, from African rhinoceroses both black and white, alive and long dead, including most of the rhinos in South Africa and Namibia, as well as some from other nations.

RhODIS has assisted with numerous investigations, says Rod Potter, a private consultant and wildlife crime investigator who has worked with the South African Police Service for more than four decades. In one case, he recalls, investigators found a suspect with a horn in his possession and used RhODIS to identify the animal before the owner even knew the rhino was dead.

In Campbell’s case, in 2019 the three poachers were convicted, to cheers from observers in the courtroom, of charges related to 10 incidents. Each gang member was sentenced to 25 years in prison.

Today, as rhino poaching has rebounded after a pandemic-induced lull, the RhODIS database remains important. And even when RhODIS can’t link evidence to a specific animal, Potter says, the genetics are often enough to point investigators to the creature’s approximate geographic origin, because genetic markers vary by location and population. And that can help illuminate illegal trade routes.

Elephants also benefit

DNA can make a big impact on investigations into elephant poaching, too. Researchers at the University of Washington in Seattle, for example, measured DNA microsatellites from roving African elephants as well as seized ivory, then built a database and a geographical map of where different genetic markers occur among elephants. The map helps to determine the geographic source of poached, trafficked tusks seized by law enforcement officials.

Elephants travel in matriarchal herds, and DNA markers also run in families, allowing the researchers to determine the relatedness of different tusks, be they from parents, offspring, siblings or half-siblings. When they find tusks from the same elephant or clan in different shipments with a common port, it suggests that the shipments were sent from the same criminal network — which is useful information for law enforcement officials.
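The simplest version of that linking, finding tusks with the same genotype in different seizures, can be sketched in a few lines of Python. The shipment labels, loci and function names below are hypothetical, and the sketch matches only identical profiles; it does not attempt the relatedness analysis (parents, siblings and so on) that the researchers describe, nor does it allow for genotyping error.

```python
from collections import defaultdict
from itertools import combinations


def profile_key(profile):
    """Turn a profile dict into a hashable key, ignoring allele order."""
    return tuple(
        sorted((locus, tuple(sorted(alleles))) for locus, alleles in profile.items())
    )


def link_shipments(tusks):
    """Given (shipment_id, profile) pairs, return shipment pairs sharing a genotype."""
    shipments_by_genotype = defaultdict(set)
    for shipment_id, profile in tusks:
        shipments_by_genotype[profile_key(profile)].add(shipment_id)

    links = set()
    for shipments in shipments_by_genotype.values():
        # Each genotype seen in two or more shipments links every pair of them.
        links.update(combinations(sorted(shipments), 2))
    return links


# Invented seizure data: labels and genotypes are hypothetical.
tusks = [
    ("seizure_1", {"L1": (10, 12), "L2": (7, 9)}),
    ("seizure_2", {"L1": (12, 10), "L2": (9, 7)}),  # same animal, alleles listed in reverse
    ("seizure_3", {"L1": (9, 9), "L2": (7, 7)}),
]
print(link_shipments(tusks))
```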

This kind of information came in handy during a recent international investigation, called Operation Kuluna, led by Hammer and colleagues at Homeland Security Investigations. It started with a sting: Undercover US investigators purchased African ivory that was advertised online. In 2020, the team spent $14,500 on 49 pounds of elephant ivory that was cut up, painted black, mixed with ebony and shipped to the United States with the label “wood.” The following year, the investigators purchased about five pounds of rhino horn for $18,000. The undercover buyers then expressed interest in lots more inventory, including additional ivory, rhino horns and pangolin scales.

The promise of such a huge score lured two sellers from the Democratic Republic of the Congo (DRC) to come to the United States, expecting to seal the $3.5 million deal. Instead, they were arrested near Seattle and eventually sentenced for their crimes. But the pair were not working alone: Operations like these are complex, says Hammer, “and behind complex conspiracies come money, organizers.” And so the investigators took advantage of elephant genetic and clan data, which helped to link the tusks to other seizures. It was like playing “Six Degrees of Kevin Bacon,” says Hammer.

Shortly after the US arrests, Hammer’s counterparts in Africa raided warehouses in the DRC to seize more than 2,000 pounds of ivory and 75 pounds of pangolin scales, worth more than $1 million.

Despite these successes, wildlife forensics remains a small field: The Society for Wildlife Forensic Science has fewer than 200 members in more than 20 countries. And while DNA analysis is powerful, the ability to identify species or individuals is only as good as the genetic databases researchers can compare their samples to. In addition, many samples contain degraded DNA that simply can’t be analyzed — at least, not yet.

Today, in fact, a substantial portion of wildlife trade crimes may go unprosecuted because researchers don’t know what they’re looking at. The situation leaves scientists stymied by that very basic question: “What is this?”

For example, forensic scientists can be flummoxed by animal parts that have been heavily processed. Cooked meat is generally traceable; leather is not. “We have literally never been able to get a DNA sequence out of a tanned product,” says Harper, who wrote about the forensics of poaching in the 2023 Annual Review of Animal Biosciences. In time, that may change: Several researchers are working to improve identification of degraded samples. They might work out ways to do so based on the proteins therein, says Spicer, since these are more resistant than DNA is to destruction by heat or chemistry.

Success, stresses Spicer, will require the cooperation of wildlife forensic scientists around the world. “Anywhere that somebody can get a profit or exploit an animal, they’re going to do it — it happens in every single country,” she says. “And so it’s really essential that we all work together.”